Teaching:TUW - UE InfoVis WS 2005/06 - Gruppe G3 - Aufgabe 3

Topic: Webserver Logfile Visualization

Application Area Analysis

The logfiles that webservers create store information about every single request made to the webserver. The remote host, the date and time of the request, the request itself, a status code and the file size are only some of the data recorded. Such logfiles can become enormous if the related website is visited often. The data stored in the logfiles contains valuable information about the visitors of the website: where they click on a page, where they come from and, indirectly, what they find interesting. As mentioned below, this information can be used by administrators, web users, web designers, advertising companies, software companies, security centres and many more. Each of these groups has different interests, which can often be matched with data from the webserver logfiles. However, the data needs to be prepared according to the target group.

So far, webservers deliver huge text files in which the fields of each entry are separated by blanks. Several statistical tools can filter the data and draw graphs and diagrams from the logfiles. However, most of them barely utilize the potential of the information given [6][Duersteler, 2005], [7][Aigner, 2005].

Dataset Analysis

The dataset we are analysing is a webserver logfile, specifically an Apache webserver access logfile. Originally there was no commonly accepted standard for logfiles, which made statistics, comparison and visualisation of logfile data very complicated [1][W3C]. Today several defined logfile standards exist. Two important formats are described below.

The Common Logfile Format

According to the World Wide Web Consortium [1][W3C] the Common Logfile Format is as follows:

remotehost rfc931 authuser [date] "request" status bytes

| remotehost: | Remote hostname (or IP number if the DNS hostname is not available, or if DNSLookup is Off). |

| remotehost is a one-dimensional discrete datatype. The IP address, however, carries different kinds of information. For a detailed description see Wikipedia. | |

| rfc931: | The remote logname of the user. |

| rfc931 is a one-dimensional discrete datatype. | |

| authuser: | The username with which the user has authenticated. |

| authuser is a one-dimensional discrete datatype. | |

| [date]: | Date and time of the request. |

| date has seven dimensions in the following format: [day/month/year:hour:minute:second zone] Day: ordinal, 2 digits | |

| "request": | The request line exactly as it came from the client. |

| request has three dimensions in the following format: "request method /filename HTTP/version" The request method is nominal; there are eight defined methods. | |

| status: | The HTTP Status Code returned to the client. |

| status is a one-dimensional nominal datatype. Here you will find a description of the HTTP Status Code classes. | |

| bytes: | The content-length of the document transferred. |

| bytes is a one-dimensional ordinal datatype. |
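The decomposition of the date and request fields described above can be sketched in a few lines of Python. This is only an illustration, not part of the prototype: the function names `split_date` and `split_request` are made up for this example, and the sketch assumes well-formed fields and English month abbreviations.

```python
from datetime import datetime


def split_date(date_field):
    """Split '[day/month/year:hour:minute:second zone]' into its seven parts.

    Assumes English month abbreviations such as 'Oct' (default C locale).
    """
    parsed = datetime.strptime(date_field.strip("[]"), "%d/%b/%Y:%H:%M:%S %z")
    return {
        "day": parsed.day,
        "month": parsed.month,
        "year": parsed.year,
        "hour": parsed.hour,
        "minute": parsed.minute,
        "second": parsed.second,
        "zone": parsed.strftime("%z"),
    }


def split_request(request_field):
    """Split '"method /filename HTTP/version"' into its three parts.

    Raises ValueError for malformed request lines (for example a bare '-').
    """
    method, filename, protocol = request_field.strip('"').split(" ", 2)
    return {
        "method": method,
        "filename": filename,
        "version": protocol.split("/", 1)[1],
    }
```

Calling `split_date("[16/Oct/2005:09:56:22 +0200]")` yields the seven date components as a dictionary; a real logfile would additionally need handling for requests that do not consist of exactly three parts.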

Combined Logfile Format

The Combined Logfile Format adds two further fields to the Common Logfile Format (see [2][Apache]):

| referer: | This gives the site that the client reports having been referred from. |

| referer is a one-dimensional discrete datatype. | |

| agent: | The User-Agent HTTP request header. This is the identifying information that the client browser reports about itself. |

| agent is a one-dimensional discrete datatype. |

One entry in the Combined Logfile Format looks as follows:

remotehost rfc931 authuser [date] "request" status bytes "referer" "agent"

Example Data

The example data we will use for the prototype uses the Combined Logfile Format. One example entry in this file looks as follows:

128.131.167.8 - - [16/Oct/2005:09:56:22 +0200] "GET /skins/monobook/external.png HTTP/1.1" 200 1178 "http://www.infovis-wiki.net/index.php/Main_Page" "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.0)"

remotehost: 128.131.167.8

rfc931: -

authuser: -

[date]: [16/Oct/2005:09:56:22 +0200]

"request": "GET /skins/monobook/external.png HTTP/1.1"

status: 200

bytes: 1178

"referer": "http://www.infovis-wiki.net/index.php/Main_Page"

"agent": "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.0)"

The whole example data file can be downloaded here.
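As a minimal sketch, an entry in the Combined Logfile Format could be split into the nine fields listed above with a regular expression. The pattern and the name `parse_combined` are assumptions made for this illustration; the pattern does not handle escaped quotes inside the request, referer or agent strings.

```python
import re

# One named group per field of the Combined Logfile Format.
COMBINED_PATTERN = re.compile(
    r'(?P<remotehost>\S+) (?P<rfc931>\S+) (?P<authuser>\S+) '
    r'\[(?P<date>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_combined(line):
    """Return a dict of field name -> string, or None if the line does not match."""
    match = COMBINED_PATTERN.match(line)
    return match.groupdict() if match else None


# The example entry from the text, written as one logical line.
entry = (
    '128.131.167.8 - - [16/Oct/2005:09:56:22 +0200] '
    '"GET /skins/monobook/external.png HTTP/1.1" 200 1178 '
    '"http://www.infovis-wiki.net/index.php/Main_Page" '
    '"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.0)"'
)
fields = parse_combined(entry)
```

All values are returned as strings; converting `status` and `bytes` to integers (and treating a `-` byte count as missing) is left to the caller.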

Target Group Analysis

Target Groups of Visualization

Visualization in simulation involves visual processing in both static and dynamic form. The simulation database, the experiment process or its results are represented in a static form, e.g. as tables or diagrams. Interaction with the simulation model and its direct manipulation also take place through a graphical representation. The dynamic illustration of a process by animation makes complex issues easier to understand. Therefore, the user interface and the visual aspect of our project will be developed and implemented using Flash and XML. We think that an audio-visual design will make the representations of the given data easier for each user to understand. We have identified the following target groups:

- Administrators

- Web users

- Web designers

- Advertising companies

- Software companies (which develop browsers and web-based applications)

- Security centers

However, we will focus on the target group of administrators or owners of the website. The visualization should give this target group the ability to see which parts of their website are visited most often.

Special Interests of Target Groups

Each log contains different types of entries, i.e. errors, warnings, information, success audits and failure audits. Therefore the visualization of the logfile differs for each target group. Website administrators are interested in the popularity and/or usability of certain pages or areas of their website. In other cases, the visualization of logfiles provides information required for legal proceedings. The logfiles may also be useful for advertising companies, which ask questions such as: How many visitors came to the website in a certain period? Where did the visitors come from? Which search words were found or not found? Which pages were looked at? What is the IP number of a visitor, and which country is he from?
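Some of these questions can be answered by simple aggregation over already parsed log entries. The following sketch assumes each entry has been reduced to a dictionary with the keys `remotehost`, `time`, `page` and `referer`; both this record layout and the sample records are hypothetical and serve only as an illustration.

```python
from collections import Counter
from datetime import datetime

# Hypothetical, hand-written records for illustration (not taken from the real logfile).
records = [
    {"remotehost": "192.0.2.1", "time": datetime(2005, 10, 16, 9, 56),
     "page": "/index.php/Main_Page", "referer": "-"},
    {"remotehost": "192.0.2.1", "time": datetime(2005, 10, 16, 9, 58),
     "page": "/index.php/Glossary", "referer": "/index.php/Main_Page"},
    {"remotehost": "192.0.2.7", "time": datetime(2005, 10, 17, 14, 3),
     "page": "/index.php/Main_Page", "referer": "http://search.example/?q=infovis"},
]


def visitors_in_period(records, start, end):
    """Count distinct remote hosts with a request in the interval [start, end)."""
    return len({r["remotehost"] for r in records if start <= r["time"] < end})


def page_popularity(records):
    """Count how often each page was requested."""
    return Counter(r["page"] for r in records)


def referer_counts(records):
    """Count where visitors came from, ignoring entries without a referer ('-')."""
    return Counter(r["referer"] for r in records if r["referer"] != "-")
```

Note that counting distinct remote hosts only approximates the number of visitors, since several people may share one IP address and one person may use several.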

Known Solutions / Methods

- Webtracer (The Webtracer uses a wide range of protocols and databases to retrieve all information on a resource on the internet, such as a domain name, an e-mail address, an IP address, a server name or a web address (URL). The relations between resources are displayed in a tree, allowing recursive analysis.)

- Conetree (Cone trees are 3D interactive visualizations of hierarchically structured information. Each sub-tree is associated with a cone; the vertex at the root of the sub-tree is placed at the apex of the cone and its children are arranged around the base of the cone. Text can be added to give more information about a node (a child of the sub-tree).)

- Matrix-Visualization (There are several alternative ways of visualizing the links and demand matrices.)

- Hyperspace-View (A graphical view of the hyperspace emerging from a document, depicted as a tree structure.)

- The Sugiyama algorithm & layout (The Sugiyama algorithm draws directed acyclic graphs meeting the basic aesthetic criteria, which makes it very suitable for describing hierarchical temporal relationships among workflow entities. It can make the visualisation of the workflow cleaner and find the best structure for a hierarchical type of information representation. The Sugiyama layout has more benefits in more complex projects.) [8]

Aim of the Visualization

The Goals of Visualization

The visualization of the logfile is intended to:

- alert you to suspicious activity that requires further investigation

- determine the extent of an intruder's activity (if anything has been added, deleted, modified, lost, or stolen)

- help you recover your systems

- provide information required for legal proceedings

- draw conclusions about the popularity and/or usability of certain pages or areas of the site.
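The first goal, alerting to suspicious activity, could for example be approximated by counting client errors per remote host. This is only one possible heuristic and not the method of the prototype: the function name, the input layout of `(remotehost, status)` pairs and the threshold of three errors are assumptions chosen for this sketch.

```python
from collections import Counter


def hosts_with_many_errors(entries, threshold=3):
    """Return hosts that received at least `threshold` 4xx responses.

    `entries` is an iterable of (remotehost, status) pairs with integer status codes.
    The threshold is an arbitrary value chosen for illustration.
    """
    errors = Counter(host for host, status in entries if 400 <= status < 500)
    return {host: count for host, count in errors.items() if count >= threshold}
```

A host flagged this way is not necessarily an intruder; broken links on the site produce the same pattern, so the result only marks candidates for further investigation.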

Design Proposal

We have derived four parameters of primary importance:

- the IP address

- the time

- the requested page

- the referer page

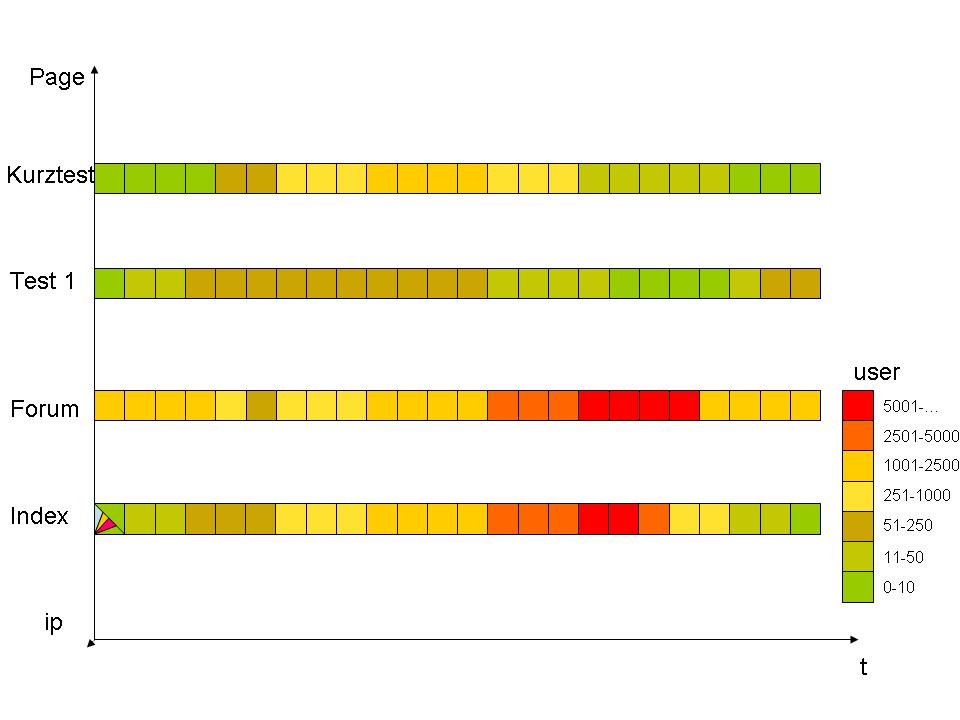

Our first approach was to visualize this data via a scatter plot:

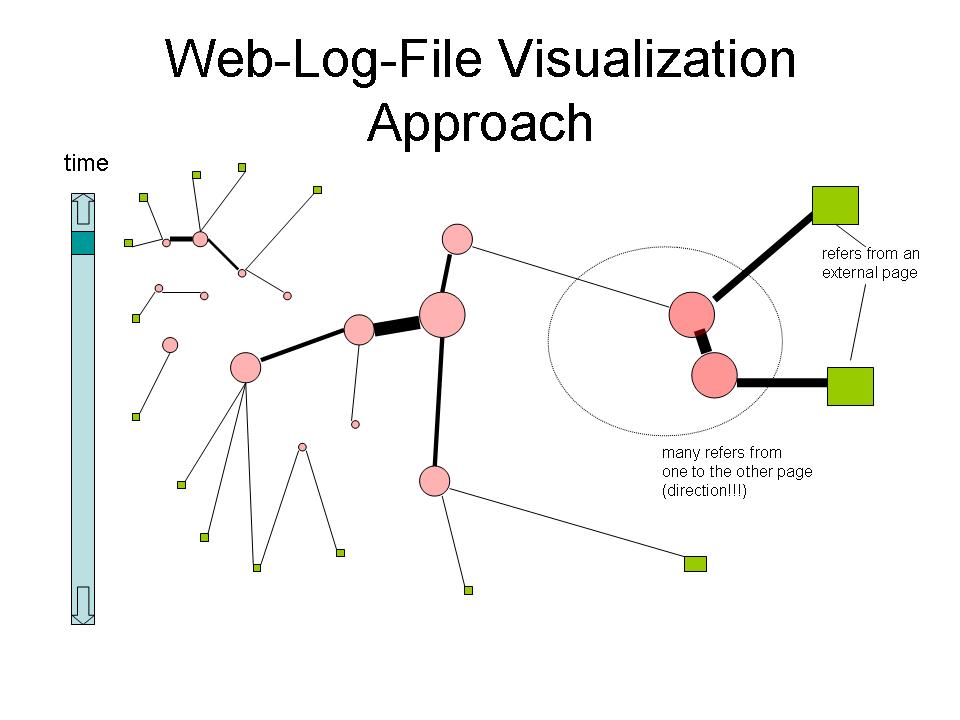

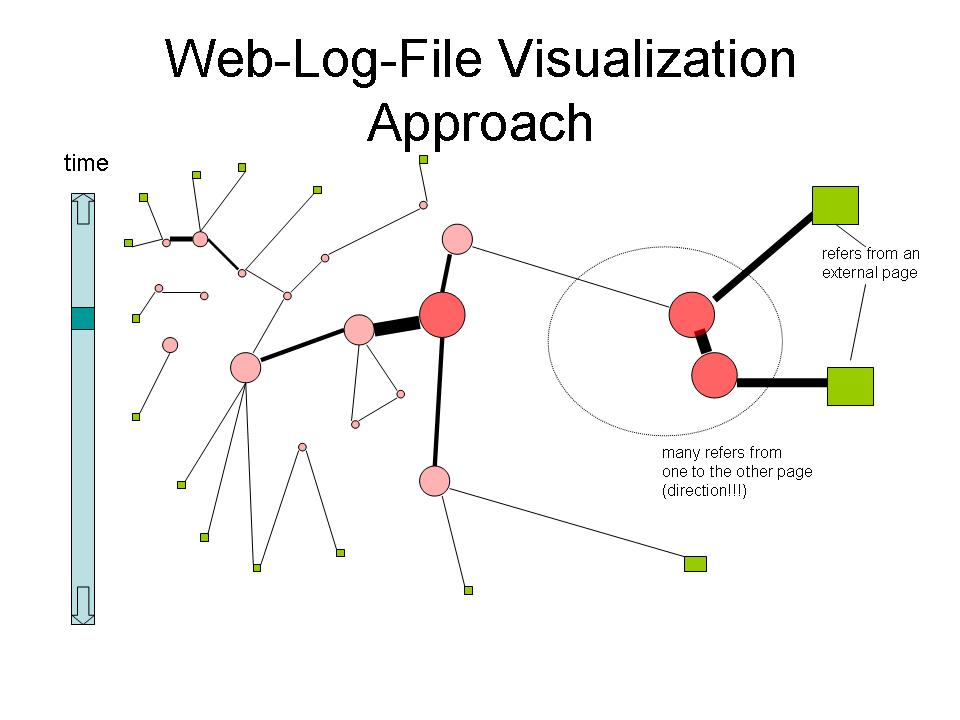

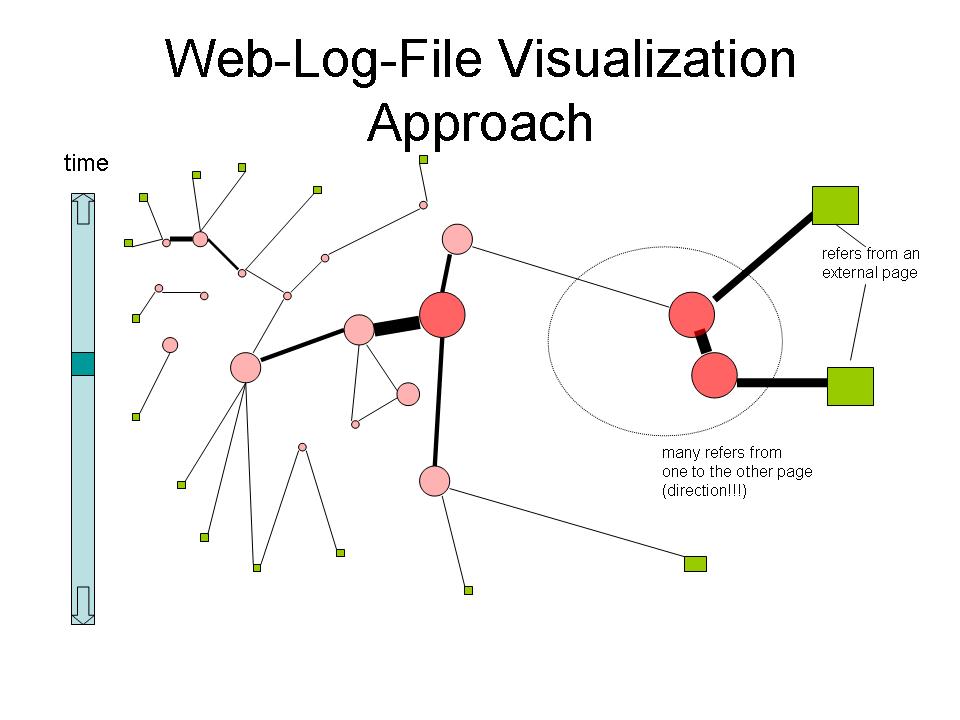

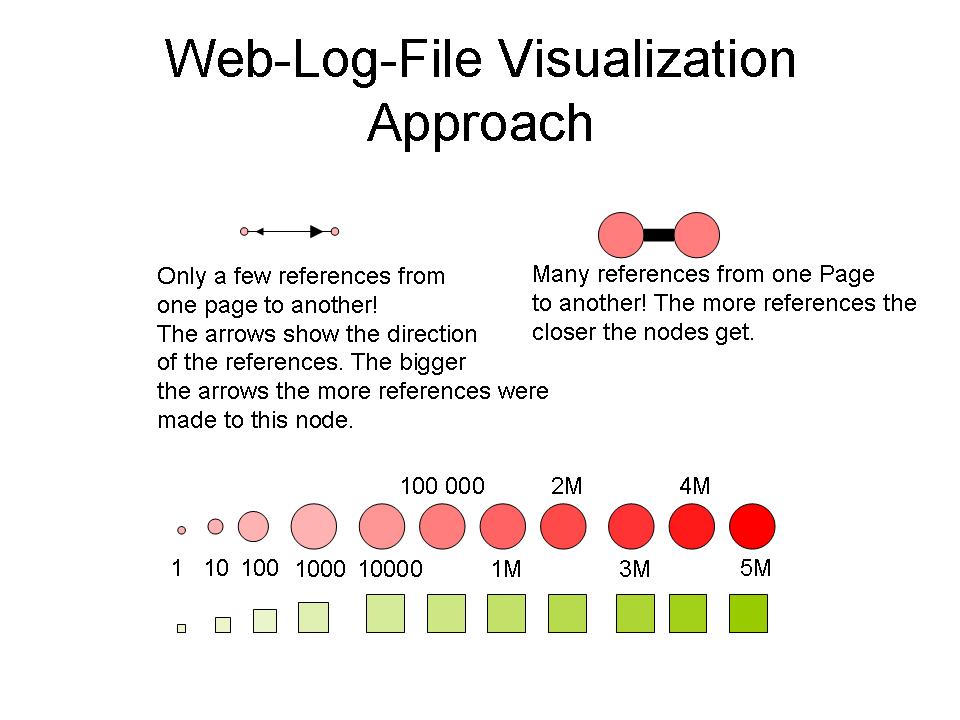

Our second approach was to visualize the access to the pages as a kind of network.

- Every page is a node.

- The node size and colour depend on the number of visitors (clients which have requested this page).

- References from external sites are represented by green squares and a line to the referred page.

- References between pages are represented by a line.

- The thickness of the line and the closeness of the nodes represent the number of references between those pages.
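The data behind such a network view could be derived from the log entries roughly as follows. This is a minimal sketch under stated assumptions: entries are given as `(remotehost, page, referer)` triples, referers are absolute URLs, and the site host is the one from the example data. The function name `build_page_network` is hypothetical, and the layout and drawing of the network are not covered.

```python
from collections import defaultdict
from urllib.parse import urlsplit

SITE_HOST = "www.infovis-wiki.net"  # host name taken from the example data


def build_page_network(records, site_host=SITE_HOST):
    """Derive node sizes and weighted edges from (remotehost, page, referer) triples.

    Returns three dicts:
      node_size:      page -> number of distinct clients that requested it
      internal_edges: (referring page, page) -> number of references
      external_edges: (external host, page) -> number of references
    """
    visitors = defaultdict(set)
    internal_edges = defaultdict(int)
    external_edges = defaultdict(int)
    for remotehost, page, referer in records:
        visitors[page].add(remotehost)
        if referer in ("", "-"):
            continue  # no referer reported by the client
        parts = urlsplit(referer)
        if parts.netloc == site_host:
            internal_edges[(parts.path, page)] += 1
        else:
            external_edges[(parts.netloc, page)] += 1
    node_size = {page: len(hosts) for page, hosts in visitors.items()}
    return node_size, dict(internal_edges), dict(external_edges)
```

The node sizes map to the size and colour of the nodes, the internal edge weights to line thickness and node closeness, and the external edges to the green squares.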

References

[1][W3C] World Wide Web Consortium, Logging Control In W3C httpd. Created at: July, 1995. Retrieved at: November 16, 2005. http://www.w3.org/Daemon/User/Config/Logging.html#common-logfile-format.

[2][Apache] The Apache Software Foundation, Apache HTTP Server: Log files. Retrieved at: November 16, 2005. http://httpd.apache.org/docs/1.3/logs.html

[3][Gershon et al., 1995] Nahum Gershon, Steve Eick, Information Visualization Proceedings Atlanta, First Edition, IEEE Computer Society Press, October 1995.

[4][Kreuseler et al., 1999] Matthias Kreuseler, Heidrun Schumann, David S. Elbert et al., Workshop on New Paradigms in Information Visualization and Manipulation, First Edition, ACM Press, November 1999.

[5][WebTracer] http://forensic.to/webhome/jsavage/www.forensictracer%5B1%5D

[6][Duersteler, 2005] Juan C. Duersteler, Logfile Analysis. InfoVis.net Magazine, Created at: October 17, 2005. Retrieved at: November 20, 2005. http://www.infovis.net/printMag.php?num=174&lang=2

[7][Aigner, 2005] Wolfgang Aigner, Webserver Logfile Visualization. Created at: November 2, 2005. Retrieved at: November 20, 2005. http://asgaard.tuwien.ac.at/%7Eaigner/teaching/infovis_ue/infovis_ue_aufgabe3_webserverLogVis.html

[8][Gutwenger, 2000] Carsten Gutwenger, Sugiyama's Algorithm ( SugiyamaLayout ) Created at: Feb. 2, 2000. Retrieved at: November 28, 2005 http://www.ads.tuwien.ac.at/AGD/MANUAL/SugiyamaLayout.html