Visual Analytics

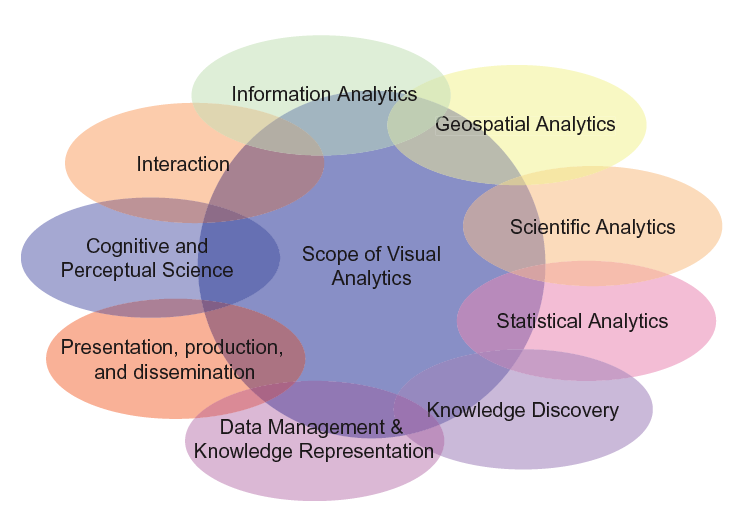

Visual analytics is a multidisciplinary field that includes the following focus areas:

- analytical reasoning techniques that let users obtain deep insights that directly support assessment, planning, and decision making;

- visual representations and interaction techniques that exploit the human eye’s broad bandwidth pathway into the mind to let users see, explore, and understand large amounts of information simultaneously;

- data representations and transformations that convert all types of conflicting and dynamic data in ways that support visualization and analysis; and

- techniques to support production, presentation, and dissemination of analytical results to communicate information in the appropriate context to a variety of audiences.

[Keim et al., 2006]

This marriage of computation, visual representation, and interactive thinking supports intensive analysis. The goal is not only to permit users to detect expected events, such as might be predicted by models, but also to help users discover the unexpected—the surprising anomalies, changes, patterns, and relationships that are then examined and assessed to develop new insight.

Related Links

- Visual Analytics Digital Library

- SEMVAST (Scientific Evaluation Methods for Visual Analytics Science and Technology): a resource on visual analytics evaluation

Visual Analytics @ YouTube

- March 15, 2010: VisMaster - Mastering the Information Age. What is visual analytics, and why is it important for Europe to fund research in this new domain?

- December 16, 2008: Jigsaw. Using visualization and visual analytics to help investigative analysis and sensemaking.

- November 03, 2008: Mikael Jern on Visual Analytics. Visual Analytics Center, OECD Explorer.

- July 10, 2007: Why Visual Analytics? - A conversation. A series of interviews with some of the best-known people in visual analytics, the science of reasoning through visualization.

- December 12, 2006: Visual Analytics. Show segment from "BusinessNOW" on Maryland Public Television.

Basic Literature

Books

- J.J. Thomas and K.A. Cook (Eds.), Illuminating the Path: The Research and Development Agenda for Visual Analytics. IEEE Press, 2005.

- Daniel Keim, Jörn Kohlhammer, Geoffrey Ellis and Florian Mansmann (Eds.), Mastering the Information Age – Solving Problems with Visual Analytics, Eurographics Association, 2010.

Papers

- J.J. Thomas and K.A. Cook, "A Visual Analytics Agenda," IEEE Computer Graphics & Applications, vol. 26, pp. 10-13, 2006.

- Pak Chung Wong and J. Thomas, "Visual Analytics," IEEE Computer Graphics & Applications, vol. 24, pp. 20-21, 2004.

- Daniel Keim, Gennady Andrienko, Jean-Daniel Fekete, Carsten Görg, Jörn Kohlhammer, and Guy Melançon, "Visual Analytics: Definition, Process, and Challenges," in Information Visualization, Andreas Kerren, John T. Stasko, Jean-Daniel Fekete, and Chris North (Eds.), Lecture Notes in Computer Science, vol. 4950, pp. 154-175. Springer-Verlag, Berlin, Heidelberg, 2008.

- J. Thomas, "Visual analytics: a grand challenge in science: turning information overload into the opportunity of the decade," Journal of Computing Sciences in Colleges, vol. 23, pp. 5-6, 2007.
